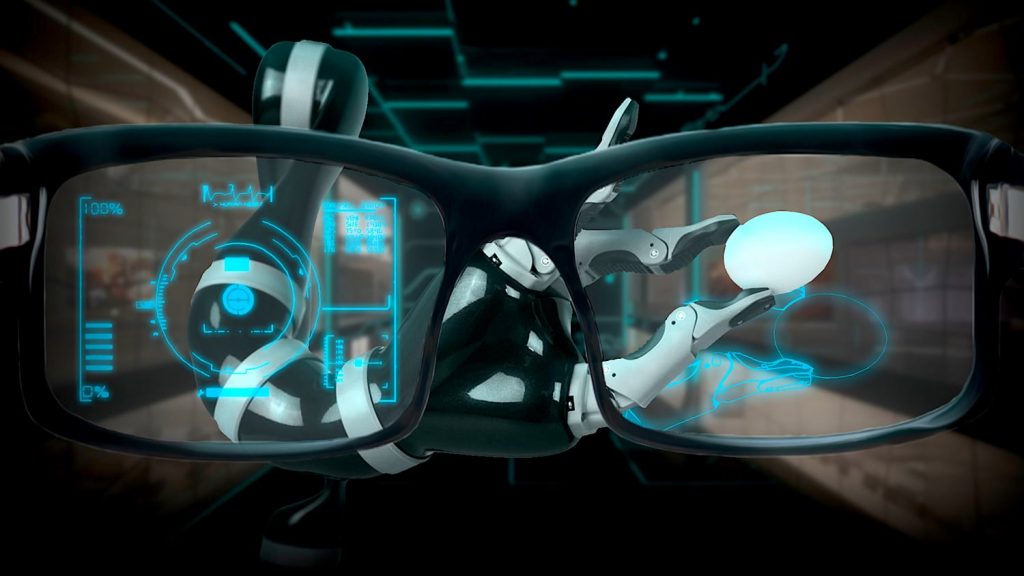

Brain-controlled robotic arm in augmented reality

A new robotic arm system has just been tested on ten people. Its special feature? The user's brain controls it directly, through an interface displayed in augmented reality.

The work comes from a team of Chinese researchers at Hebei University of Technology and several other institutions. It could lead to practical tools that make daily life easier for people with disabilities or limited mobility.

Brain-controlled machines have long since left the realm of science fiction. “In recent years, brain-controlled robotics has made more and more progress through the development of robotic arms, brain science and information decoding technology,” Zhiguo Luo, one of the researchers responsible for the study, told the specialized outlet Tech Xplore.

However, the scientists identified shortcomings in how this type of technology is applied, and their new approach aims to address them. “Disadvantages such as low flexibility limit its wide application. We aim to enhance the lightness and comfort of the brain-controlled robotic arms,” they explain.

To make the equipment needed to operate this brain-controlled arm lighter, the researchers built a human-machine interface based on augmented reality. This technology, delivered here through a pair of glasses, overlays virtual elements on the real environment, blending the real and the virtual. Most previous brain-controlled arm experiments, by contrast, relied on conventional control screens.

According to the research team, such screens require a fixed mount, which limits freedom of movement, and that is not their only drawback. Unlike a traditional screen, an augmented reality display lets users focus on a single environment instead of constantly shifting their attention between the screen and the real world. This is why, in the researchers' view, their approach offers greater “flexibility” and smoother use.

How does brain control work?

To make the arm move as the user intends, the researchers used a brain-computer interface based on steady-state visually evoked potentials, or SSVEP. Put simply, these are the brain's natural responses to visual stimuli flickering at specific frequencies. By detecting these frequencies with carefully placed electrodes, the system can tell which point a person is looking at, and can therefore trigger an interaction with the machine at that moment. This creates a direct channel of information between the brain and the machine, without requiring any physical movement.
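The detection principle can be illustrated with a toy example. The Python sketch below is not the study's decoder: the article does not say which algorithm the team used, and published SSVEP systems often rely on other methods such as canonical correlation analysis. It assumes a single simulated EEG channel sampled at 250 Hz and four hypothetical flicker frequencies, each mapped to one command. It then picks the frequency with the strongest response, measured with one discrete Fourier transform (DFT) bin per frequency.

```python
import math
import random

def band_power(signal, freq, fs):
    """Power of `signal` at `freq` Hz, computed from a single DFT bin."""
    re = sum(x * math.cos(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    return (re * re + im * im) / len(signal)

def detect_target(signal, stimulus_freqs, fs):
    """Return the stimulus frequency that evokes the strongest response."""
    powers = {f: band_power(signal, f, fs) for f in stimulus_freqs}
    return max(powers, key=powers.get)

# Hypothetical setup: 2 s of one simulated EEG channel sampled at 250 Hz,
# recorded while the user gazes at the target flickering at 12 Hz.
fs = 250
rng = random.Random(0)  # fixed seed so the example is reproducible
eeg = [
    math.sin(2 * math.pi * 12 * i / fs)          # simulated SSVEP response at 12 Hz
    + 0.5 * math.sin(2 * math.pi * 3 * i / fs)   # slow background activity
    + rng.gauss(0, 0.3)                          # measurement noise
    for i in range(2 * fs)
]
targets = [8.0, 10.0, 12.0, 15.0]  # hypothetical flicker frequencies, one per command
print(detect_target(eeg, targets, fs))  # 12.0
```

With a 2-second window, the frequency resolution is 0.5 Hz, so every candidate frequency falls exactly on a DFT bin. Real systems typically also use harmonics of each flicker frequency and several electrodes.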

Another improvement in this project is an asynchronous control method. Instead of relying on fixed time windows, the system acts only when the user decides to issue a command. According to the researchers, this more flexible method can be tailored to each individual, something that had not yet been achieved with this type of technology. Because the interface needs to be displayed for only 2.04 seconds on average to select the correct command, asynchronous control also helps limit visual fatigue.
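The asynchronous idea can be sketched by adding an "idle" state: when no stimulus response is strong enough, the system sends no command at all. The minimal Python example below assumes a hypothetical fixed amplitude threshold. The article does not say how the team decides that the user intends to issue a command. The signals are simulated, and the 250 Hz sampling rate and flicker frequencies are likewise illustrative assumptions.

```python
import math
import random

def amplitude_at(signal, freq, fs):
    """Estimate the amplitude of the `freq` Hz component from a single DFT bin."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    return 2 * math.hypot(re, im) / n

def async_decide(signal, stimulus_freqs, fs, threshold=0.5):
    """Return the attended stimulus frequency, or None if the user seems idle.

    Hypothetical rule: a command is issued only when the strongest stimulus
    response reaches `threshold` (in the same units as the signal).
    """
    amps = {f: amplitude_at(signal, f, fs) for f in stimulus_freqs}
    best = max(amps, key=amps.get)
    return best if amps[best] >= threshold else None

fs = 250
rng = random.Random(0)  # fixed seed so the example is reproducible
n_samples = 2 * fs      # 2 s analysis window

# User not looking at any target: background activity plus noise only.
background = [0.5 * math.sin(2 * math.pi * 3 * i / fs) + rng.gauss(0, 0.3)
              for i in range(n_samples)]
# User gazing at the 12 Hz target: same background plus a 12 Hz response.
attending = [b + math.sin(2 * math.pi * 12 * i / fs) for i, b in enumerate(background)]

targets = [8.0, 10.0, 12.0, 15.0]  # hypothetical flicker frequencies
print(async_decide(attending, targets, fs))   # 12.0
print(async_decide(background, targets, fs))  # None
```

Calibrating the threshold for each user during a short training session is one plausible way to adapt such a rule to individual differences.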

So far, the team has tested the system on 10 healthy people in a relatively comfortable environment, including in online tests, with satisfactory results: a success rate of 94.97%. Even so, performance remained below that of tests run with interfaces on conventional screens, for several reasons.

New ways to explore

Because augmented reality is overlaid on the real world, it introduces more visual distractions. The display also has lower contrast and brightness, so it may stimulate the eye less strongly. Finally, its refresh time is sometimes longer.

The researchers therefore plan to continue their work. They next want to run tests with people with disabilities, to verify the reliability and practicality of the system in concrete everyday and social situations. They also intend to keep refining the hardware involved. The next step: real life? “Our approach contributes to improving the practicality of a brain-controlled robotic arm and accelerating the application of this technology in real life,” Zhiguo Luo affirms.

Source: Journal of Neuroengineering
