Google doesn’t want you to be fooled anymore

Google wants to help you tell whether an image was generated by artificial intelligence. To do this, it will add a label to the content concerned. The idea is to give images more transparency and context.

Google wants to develop more ethical and transparent AI. Some images generated by artificial intelligence are now so realistic that people can mistake them for real photos. Tools like Midjourney or Dall-E produce impressive, sometimes misleading, images.

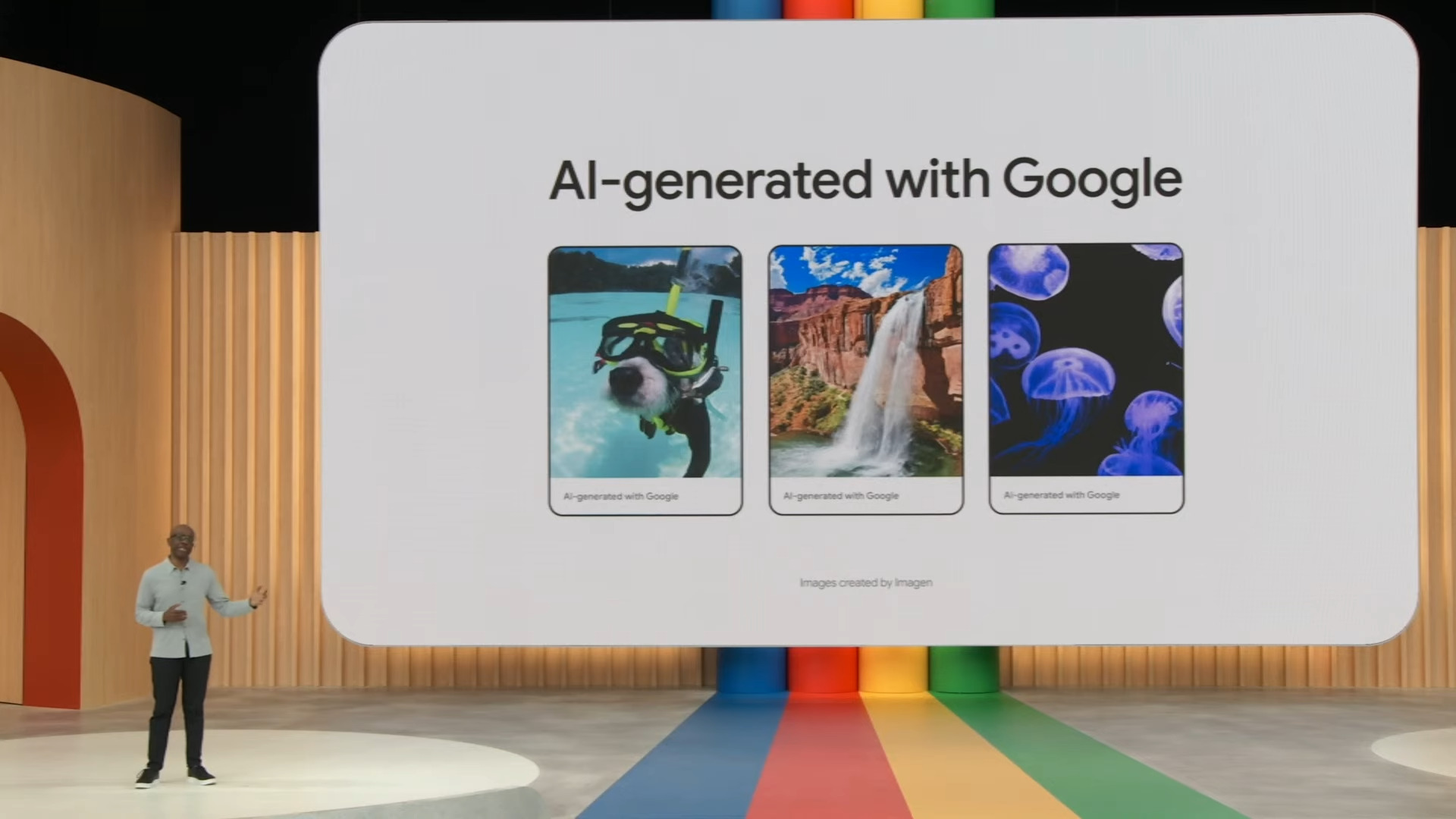

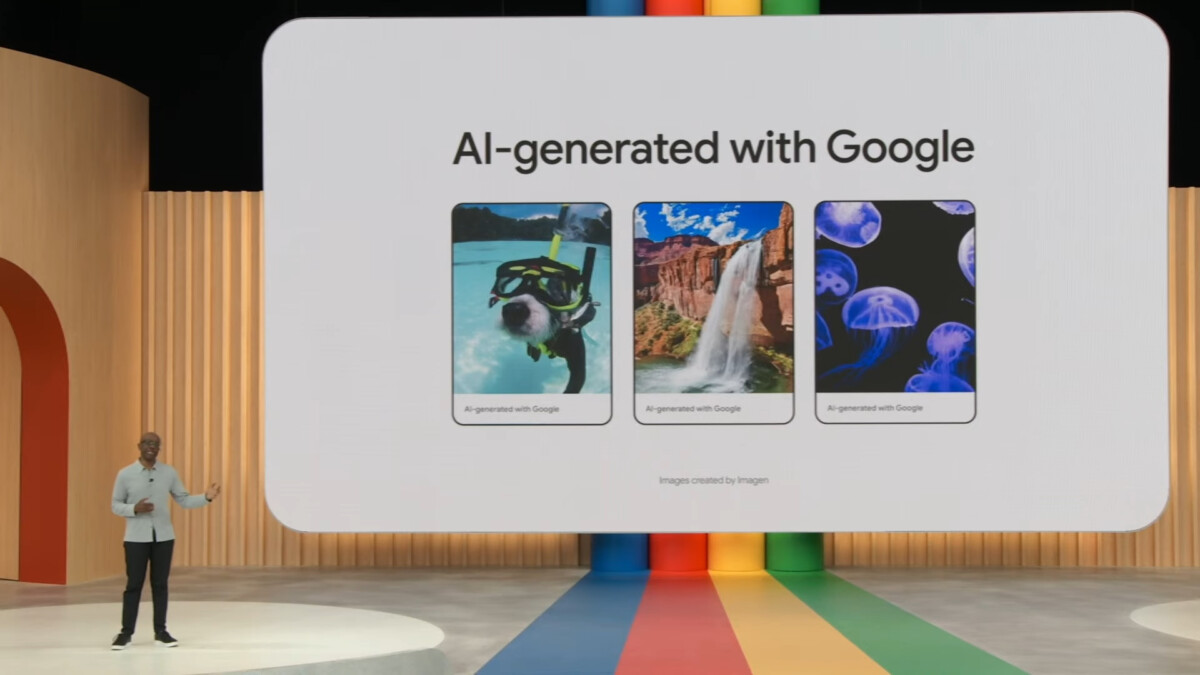

A watermark on the agenda

To counter this and give users more context, Google will add a label, a watermark, to every image generated by its artificial intelligence. During the Google I/O 2023 conference, a notice stating that the image was created by artificial intelligence with Google appeared on the examples shown.

Mountain View will also let all users add this watermark to their own AI-generated images, again with the goal of transparency towards all Internet users. It is also a good way to stand out from competing tools and set an example in terms of ethics and responsibility.

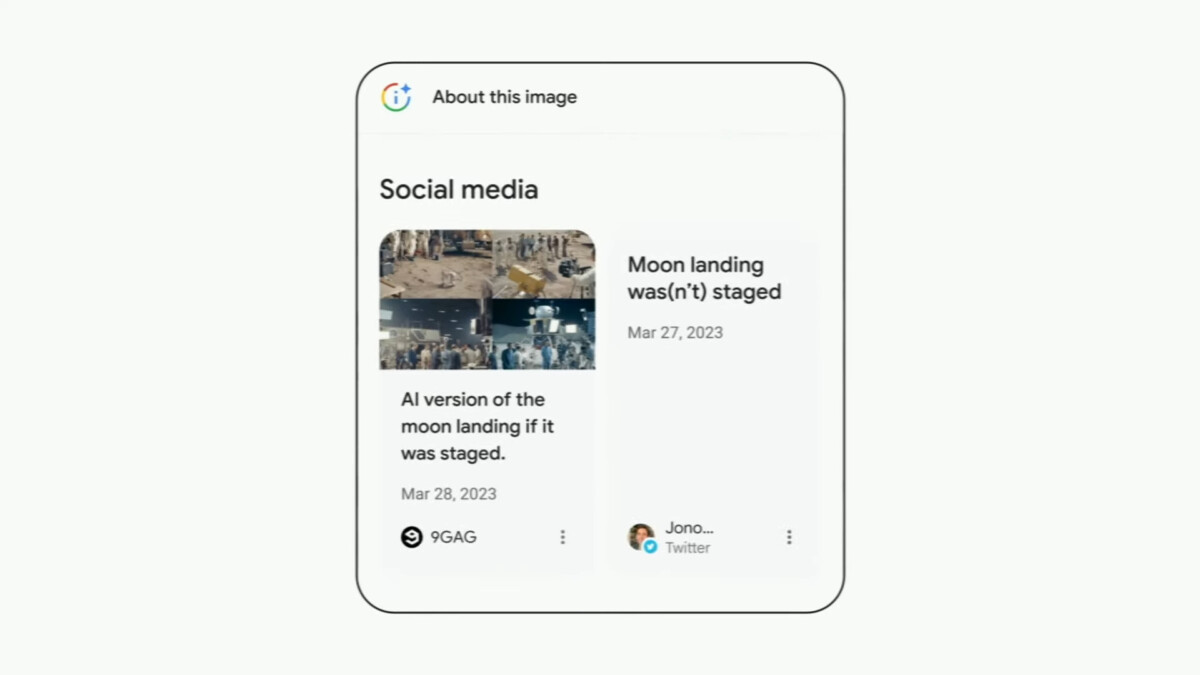

Better checks on the origin of an image

The California giant also wants to help you better verify the origin of an image. An “About this image” button, with an equivalent label in French, will show where and when the image first appeared on the web. Where else can it be found, on which sites and in which articles, and has it been the subject of a fact check? In short, the idea is to provide a better history of digital images so that users are not fooled.

These new features will arrive in the coming months.

