Microsoft isolates Google by teaming up with Meta on its new artificial intelligence model

The second generation of Meta’s AI language model arrives just five months after the first. The software is still free and open source, but Facebook’s parent company is now developing it in partnership with Microsoft. Google and its LaMDA had better watch out.

Unlike Google or OpenAI, Meta does not yet offer a widely known, AI-powered conversational tool for the general public. But since February, it has had a large language model: in other words, the engine that makes such tools possible, from text generation to conversation, as well as more complex programs for solving mathematical problems, writing code, supporting scientific research, and more.

LLaMA 2 was announced in a press release on July 18, already the second generation of the language model. Contrary to rumors reported by the Financial Times in the preceding hours, Meta has not turned its model into a paid product: it remains free and open source for researchers and businesses. “We include model weights, pre-trained model source code, and fine-tuned versions,” the announcement reads. “Fine-tuned versions” refers to variants of the language model adapted to specific uses and data.

The announcement of LLaMA 2’s general availability comes just a week after Bard, Google’s chatbot meant to rival ChatGPT, was rolled out widely (notably in France). Microsoft’s decision to partner with Meta comes as a small surprise, since the tech giant has already invested in OpenAI and also uses its services. Google finds itself a little more isolated.

Microsoft, preferred partner

Meta’s model appears to be more geared toward professional use (OpenAI is also working on a professional version of ChatGPT), which appealed to Microsoft: the two companies have joined forces, with Microsoft becoming Meta’s “preferred partner”. LLaMA is therefore not being sold as a product in its own right; instead, it is a new arrival in the AI model catalog offered to Microsoft Azure customers. LLaMA will also be optimized to run locally on Windows. “Windows developers will be able to use Llama by targeting the DirectML execution provider through the ONNX Runtime,” Microsoft said in a separate press release.

The two companies have already collaborated on the PyTorch framework, which since 2016 has been one of the most widely used tools for training neural networks in artificial intelligence and deep learning. They later partnered to create the PyTorch Foundation, which launched in September 2022 and now runs the framework. Beyond Microsoft, customers of Amazon Web Services (AWS) and Hugging Face will also have access to LLaMA 2.

LLaMA 2 vs. LLaMA 1

While the press release did not really dwell on the differences between LLaMA 1 and LLaMA 2, the language model’s website is another matter entirely. There we learn that the second generation was trained on 40% more data than the first. For better comprehension, better predictions, and more relevant text generation, Meta says LLaMA 2 also doubles the “context length”, that is, the amount of text the model can take into account at once (from 2,048 to 4,096 tokens). Context length is a key factor in the quality of the results, but it is also what makes the model slower to run.

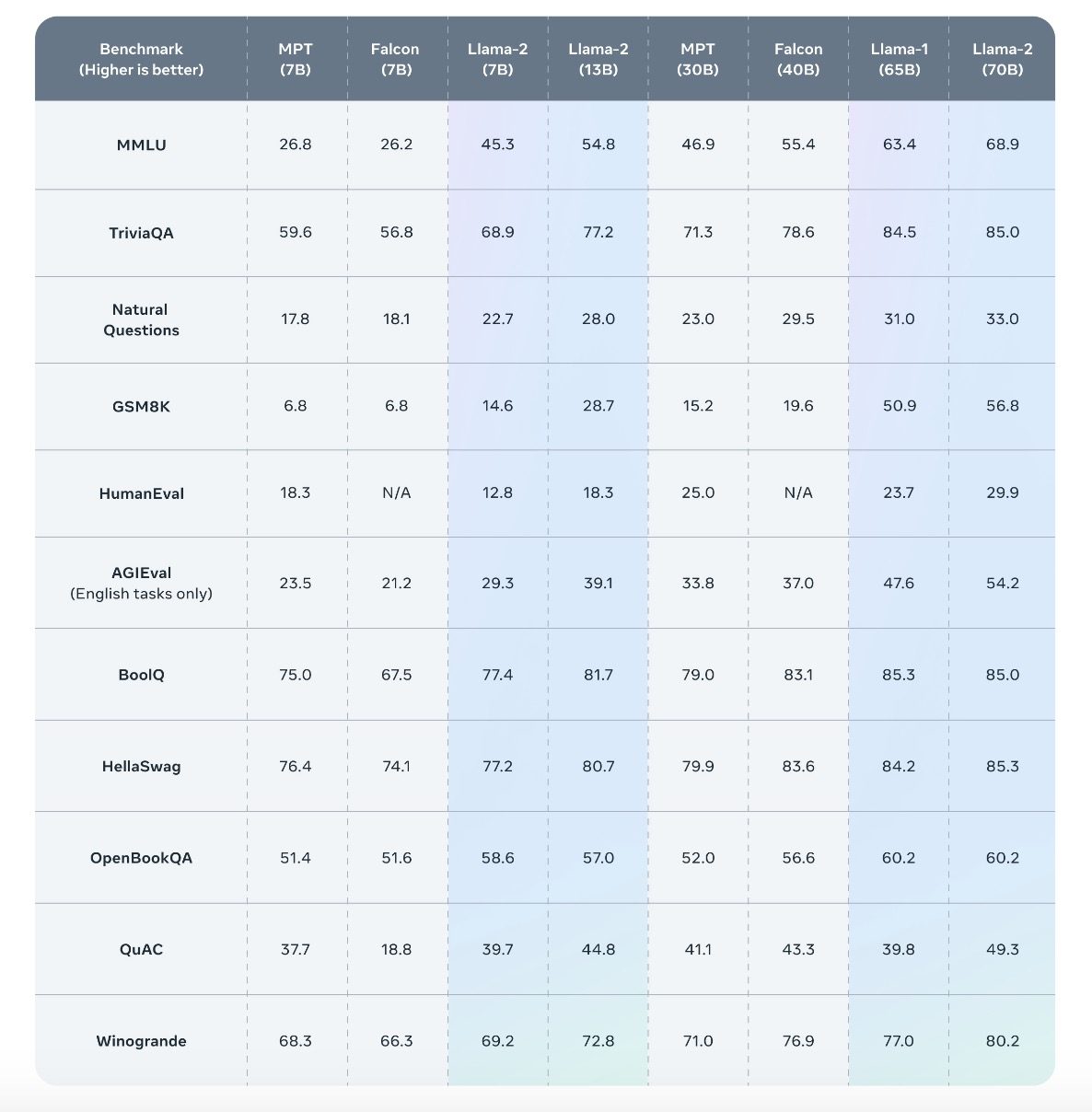

In terms of performance, Meta has published several benchmark results, comparing its models with those of some competitors (Google and OpenAI are not mentioned) as well as with different versions of LLaMA 1. On the chart, MPT is MosaicML’s open-source language model, and Falcon is the open-source language model from the Technology Innovation Institute in Abu Dhabi, which arrived last June and was particularly surprising for its performance. According to Meta, its best model, with 70 billion parameters, outperforms the 40-billion-parameter version of Falcon.

Since February, Meta says it has received 100,000 requests for access to the LLaMA 1 model. As with Falcon, or with Google’s and OpenAI’s models, the race will go on: delivering models that are more capable, more precise for specific uses through “fine-tuned” versions, but also lighter, so that they can run on more accessible machines, including in academia and the workplace.
